Kafka Producer Properties Calculator

Optimize your Kafka producer properties for maximum throughput with minimal network footprint

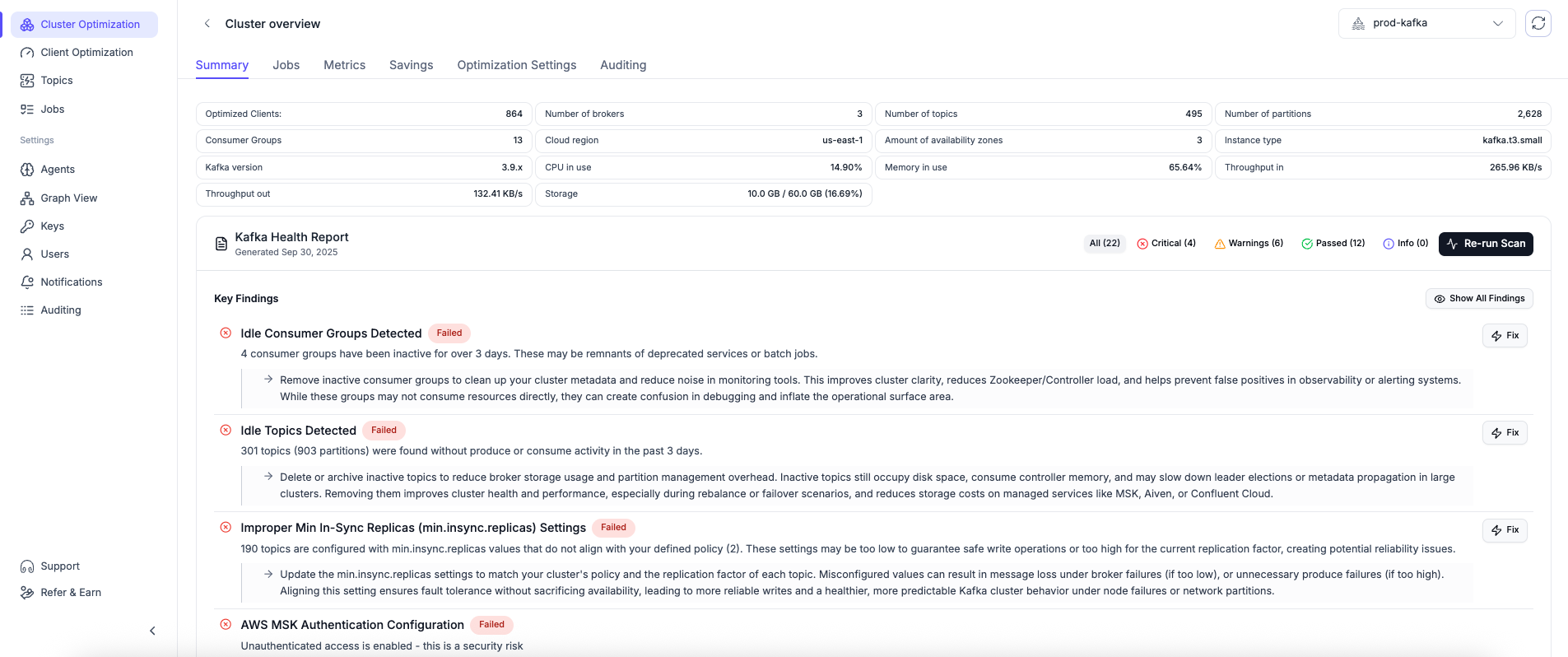


Recommended Producer Properties

Expected Performance

Reliability Trade-offs

Understanding Key Settings

batch.size

Upper bound, in bytes, on the size of a single batch of records bound for one partition. Larger batches raise throughput, but a batch that takes longer to fill can add latency.

linger.ms

Time in milliseconds the producer waits for more records to join a batch before sending it. A batch that reaches batch.size is sent without waiting out the delay. Higher values increase throughput at the cost of latency.
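To see how batch.size and linger.ms interact, it can help to estimate which of the two sends a batch first. The sketch below is a rough calculation, not part of the calculator: the function names are hypothetical, and it assumes a steady per-partition record rate and ignores per-record overhead and compression.

```python
def batch_fill_time_ms(batch_size_bytes, record_bytes, records_per_sec):
    """Milliseconds for one partition's batch to reach batch.size at a steady rate."""
    records_per_batch = batch_size_bytes // record_bytes
    return 1000.0 * records_per_batch / records_per_sec


def batch_trigger(batch_size_bytes, linger_ms, record_bytes, records_per_sec):
    """Name the setting that is expected to send the batch first."""
    fill_ms = batch_fill_time_ms(batch_size_bytes, record_bytes, records_per_sec)
    return "batch.size" if fill_ms <= linger_ms else "linger.ms"


# Assumed workload: 1 KiB records at 2000 records/s on one partition,
# with batch.size=16384 (16 records per batch).
fill_ms = batch_fill_time_ms(16384, 1024, 2000)
short_linger = batch_trigger(16384, 5, 1024, 2000)   # linger expires before the batch fills
long_linger = batch_trigger(16384, 20, 1024, 2000)   # batch fills before linger expires
```

Under these assumptions a batch fills in 8 ms, so a linger.ms below that sends partly filled batches, and raising linger.ms past it brings no further batching benefit unless batch.size grows too.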

buffer.memory

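Total memory, in bytes, the producer can use to buffer records waiting to be sent to the server. When the buffer is full, send() blocks for up to max.block.ms and then throws an exception.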
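One way to reason about buffer.memory is as headroom: how long the producer can keep accepting records while nothing is being delivered, before send() starts to block (for up to max.block.ms) and eventually fails. The sketch below is a back-of-the-envelope estimate with a hypothetical function name; it assumes a constant produce rate and a broker that accepts nothing during the stall.

```python
def buffer_headroom_seconds(buffer_memory_bytes, produce_bytes_per_sec):
    """Seconds of a full delivery stall the buffer can absorb before send() blocks."""
    return buffer_memory_bytes / produce_bytes_per_sec


MIB = 1024 * 1024

# Assumed workload: 32 MiB of buffer.memory, application producing 4 MiB/s.
headroom = buffer_headroom_seconds(32 * MIB, 4 * MIB)
```

With these numbers the buffer covers about 8 seconds of stall. Doubling the produce rate halves that, which is one reason buffer.memory is usually sized together with the expected throughput.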

max.in.flight.requests.per.connection

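Maximum number of unacknowledged requests the client will send on a single connection before blocking. Higher values increase throughput, but when a failed request is retried, values above 1 can reorder records unless idempotence (enable.idempotence) is turned on.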
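The ordering effect can be illustrated with a toy model. This is a deliberately simplified simulation, not the client's actual retry logic: the function `delivery_order` is hypothetical, batches are sent in windows of max.in.flight.requests.per.connection, a batch listed in `fail_first_attempt` fails once and is retried at the front of the queue, and idempotence is assumed to be off.

```python
def delivery_order(num_batches, max_in_flight, fail_first_attempt):
    """Return the order in which batches reach the log under the toy model."""
    pending = list(range(num_batches))
    failed_once = set()
    committed = []
    while pending:
        # Send up to max_in_flight batches without waiting for acknowledgments.
        window, pending = pending[:max_in_flight], pending[max_in_flight:]
        retries = []
        for batch in window:
            if batch in fail_first_attempt and batch not in failed_once:
                failed_once.add(batch)
                retries.append(batch)    # first attempt failed, retry later
            else:
                committed.append(batch)  # acknowledged and written
        pending = retries + pending      # retries go out before new batches
    return committed


one_in_flight = delivery_order(4, 1, {0})   # [0, 1, 2, 3]
two_in_flight = delivery_order(4, 2, {0})   # [1, 0, 2, 3]
```

With one request in flight, the failed batch 0 is retried before batch 1 is ever sent, so order holds. With two in flight, batch 1 is already written by the time batch 0 is retried, so it lands ahead of batch 0.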

compression.type

Compression algorithm applied to batches: none, gzip, snappy, lz4, or zstd. Compression reduces network bandwidth usage at the cost of CPU, and it tends to be more effective on larger batches.
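Because the producer compresses whole batches rather than individual records, similar records in the same batch share redundancy. The sketch below illustrates this with Python's standard gzip module; the record shape is made up, and joining records with newlines is only a stand-in for Kafka's real batch format.

```python
import gzip
import json

# Hypothetical, highly repetitive event records.
records = [
    json.dumps({"user_id": i, "event": "page_view", "path": "/products/list"}).encode()
    for i in range(200)
]

raw_bytes = sum(len(r) for r in records)

# Compressing each record on its own pays the gzip header cost 200 times
# and cannot exploit repetition across records.
per_record_bytes = sum(len(gzip.compress(r)) for r in records)

# Compressing the records together, as a batch, lets repeated keys and
# values be encoded once and referenced afterwards.
batched_bytes = len(gzip.compress(b"\n".join(records)))

print(f"raw={raw_bytes} per_record={per_record_bytes} batched={batched_bytes}")
```

The exact ratios depend on the data and the algorithm, but the batched size should come out well below both other figures, which is why compression.type is usually tuned together with batch.size and linger.ms.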

acks

Controls durability guarantees. "all" waits for every in-sync replica to acknowledge the write (slowest but most durable), "1" waits for the leader only (faster), and "0" does not wait for any acknowledgment (fastest but least durable).
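Putting the six settings together, a configuration can be written out as a standard producer .properties file. The sketch below shows two illustrative profiles; the specific values are assumptions chosen to show the direction of each trade-off, not the calculator's recommendations, and `to_properties` is a hypothetical helper.

```python
def to_properties(config):
    """Render a config dict as key=value lines, sorted by key."""
    return "\n".join(f"{key}={value}" for key, value in sorted(config.items()))


# Leans toward throughput: large batches, some linger, leader-only acks.
throughput_leaning = {
    "batch.size": 131072,
    "linger.ms": 20,
    "buffer.memory": 67108864,
    "max.in.flight.requests.per.connection": 5,
    "compression.type": "lz4",
    "acks": "1",
}

# Leans toward durability and ordering: acks from all in-sync replicas,
# one request in flight so retries cannot reorder records.
durability_leaning = dict(
    throughput_leaning,
    **{"acks": "all", "max.in.flight.requests.per.connection": 1},
)

print(to_properties(durability_leaning))
```

Any real values should be validated against the actual workload, for example by measuring throughput and latency before and after a change.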